
How to Use #SemanticKernel, Plugins – 2/N

Hi!

In the previous posts we learned how to create a simple Semantic Kernel chat application:

- Add services to the KernelBuilder, like Chat

- Build a Kernel

- Run a prompt with the Kernel

Today we will change how we interact with the AI models: instead of a chat conversation, we will use plugins.

Plugins

Let’s start with a definition of a plugin, courtesy of Microsoft Copilot:

A plugin is a unit of functionality that can be run by Semantic Kernel, an AI platform that allows you to create and orchestrate AI apps and services. Plugins can consist of both native code and requests to AI services via prompts. Plugins can interoperate with plugins in ChatGPT, Bing, and Microsoft 365, as well as other AI apps and services that follow the OpenAI plugin specification.

Plugins are composed of functions that can be exposed to AI apps and services. The functions within plugins can be orchestrated by an AI application to accomplish user requests. To enable automatic orchestration, plugins also need to provide semantic descriptions that specify the function’s input, output, and side effects. Semantic Kernel uses connectors to link the functions to the AI services that can execute them.

There are two types of functions that can be created within a plugin: prompts and native functions. Prompts are natural language requests that can be sent to AI services like ChatGPT, Bing, and Microsoft 365. Native functions are code snippets that can be written in C# or Python to manipulate data or perform other operations. Both types of functions can be annotated with semantic descriptions to help the planner choose the best functions to call.

1st Plugin: Questions

Let’s create our 1st plugin. We need to have two types of files:

- A prompt file. This file contains the natural language instructions for the AI service to execute the function.

- A config.json file that contains the semantic description of the function, its input parameters, and its execution settings.

_Note: We can also create native functions using C# or Python code, annotated with attributes that describe their behavior to the planner. I will cover this in future posts._
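As a rough sketch of the on-disk layout, the snippet below creates one folder per function and drops the two files into it. The `sk-plugins-demo` parent folder under the temp directory is only an assumption for the demo, and the file contents are shortened placeholders. The prompt file is named `skprompt.txt` here, which is the name Semantic Kernel looks for when it loads a prompt folder.

```csharp
using System;
using System.IO;

// Assumption: a throwaway parent folder in the temp directory, used only for this demo.
string pluginsRoot = Path.Combine(Path.GetTempPath(), "sk-plugins-demo");

// One sub-folder per function; the folder name becomes the function name.
string questionDir = Path.Combine(pluginsRoot, "Question");
Directory.CreateDirectory(questionDir);

// Shortened placeholder contents; the full files are shown in the post.
File.WriteAllText(Path.Combine(questionDir, "skprompt.txt"), "Q: {{$input}}");
File.WriteAllText(
    Path.Combine(questionDir, "config.json"),
    "{ \"schema\": 1, \"description\": \"Answer any question\" }");

// List what was created, relative to the plugins root.
foreach (string file in Directory.GetFiles(questionDir))
{
    Console.WriteLine(Path.GetRelativePath(pluginsRoot, file));
}
```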

I created a folder named “Question” and added both files inside. This is the content of the config.json file.

{
  "schema": 1,
  "description": "Answer any question",
  "execution_settings": {
    "default": {
      "max_tokens": 100,
      "temperature": 1.0,
      "top_p": 0.0,
      "presence_penalty": 0.0,
      "frequency_penalty": 0.0
    }
  }
}

And this is the prompt.

I am a highly intelligent question answering bot. If you ask me a question that is rooted in truth, I will give you the answer. If you ask me a question that is nonsense, trickery, or has no clear answer, I will respond with "Unknown, please try again".

Q: What is human life expectancy in the United States?

A: Human life expectancy in the United States is 78 years.

Q: Who was president of the United States in 1955?

A: Dwight D. Eisenhower was president of the United States in 1955.

Q: Which party did he belong to?

A: He belonged to the Republican Party.

Q: What is the square root of banana?

A: Unknown

Q: How does a telescope work?

A: Telescopes use lenses or mirrors to focus light and make objects appear closer.

Q: Where did the first humans land on the moon in 1969?

A: The first humans landed on the moon on the southwestern edge of the Sea of Tranquility.

Q: Name 3 movies about outer space.

A: Aliens, Star Wars, Apollo 13

Q: How many squigs are in a bonk?

A: Unknown

Q: {{$input}}
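The `{{$input}}` placeholder on the last line is where the question we pass in ends up. The snippet below is only an illustration of that idea using a plain string replacement; it is not how Semantic Kernel's template engine is implemented, and `RenderPrompt` is a hypothetical helper name.

```csharp
using System;

// Hypothetical helper: stands in for the template engine with a simple replace.
static string RenderPrompt(string template, string input) =>
    template.Replace("{{$input}}", input);

// A shortened version of the prompt, keeping only the last example and the placeholder.
string template = "Q: How many squigs are in a bonk?\nA: Unknown\nQ: {{$input}}";

string rendered = RenderPrompt(template, "Which country won the World Cup in 1986?");
Console.WriteLine(rendered);
```

The few-shot examples stay untouched and only the final `Q:` line changes, which is why the model tends to continue in the same question and answer style.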

C# code to use our plugin

The code to use our plugin is very straightforward:

- First, we locate and load the local folder that contains the plugin directories.

- Then, we run the prompts using the kernel.

A complete solution will look like this:

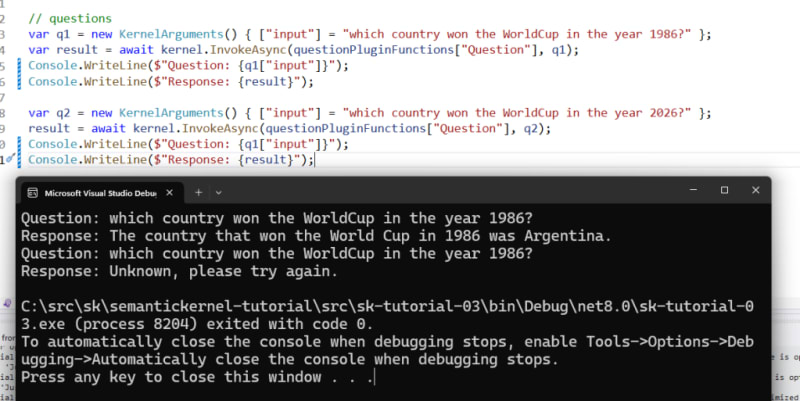
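In text form, the two steps can be sketched roughly as follows. This is a minimal sketch and not necessarily the exact code in the screenshot: it assumes the Microsoft.SemanticKernel 1.x NuGet package, an OpenAI key in an `OPENAI_API_KEY` environment variable, a placeholder model id, and a `plugins` folder next to the executable that contains the `Question` folder. The second question is a made-up example of a question about the future.

```csharp
using System;
using System.IO;
using Microsoft.SemanticKernel;

// Assumption: the API key lives in an environment variable.
string apiKey = Environment.GetEnvironmentVariable("OPENAI_API_KEY")
    ?? throw new InvalidOperationException("Set OPENAI_API_KEY first.");

// Build the kernel with a chat completion service (the model id is a placeholder).
IKernelBuilder builder = Kernel.CreateBuilder();
builder.AddOpenAIChatCompletion(modelId: "gpt-4o-mini", apiKey: apiKey);
Kernel kernel = builder.Build();

// Step 1: locate and load the folder that contains the plugin directories.
// Assumption: plugins/Question/skprompt.txt and plugins/Question/config.json exist.
string pluginsDirectory = Path.Combine(Directory.GetCurrentDirectory(), "plugins");
KernelPlugin questionPluginFunctions = kernel.ImportPluginFromPromptDirectory(pluginsDirectory);

// Step 2: run the prompt for each question using the kernel.
string[] questions =
{
    "which country won the WorldCup in the year 1986?",
    "Who will win the World Cup in 2050?" // hypothetical future question
};

foreach (string question in questions)
{
    var arguments = new KernelArguments { ["input"] = question };
    FunctionResult result = await kernel.InvokeAsync(questionPluginFunctions["Question"], arguments);
    Console.WriteLine($"Q: {question}");
    Console.WriteLine($"A: {result}");
}
```

Each sub-folder of the plugin directory becomes a function, which is why the function is looked up by the folder name `Question`.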

And, once executed, this is the sample output.

Our model answered the 2 questions, and because the model can’t answer a future question, it responds with the default unknow message.

Updating our prompt

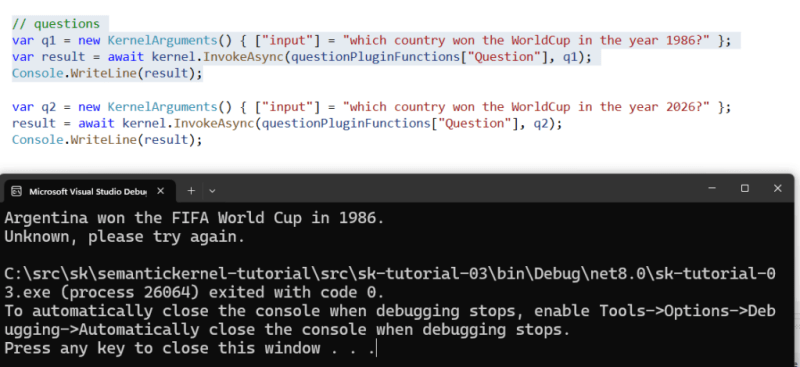

Now let’s print just the answer to the prompt. The code will look like this:

// questions
var q1 = new KernelArguments() { ["input"] = "which country won the WorldCup in the year 1986?" };
var result = await kernel.InvokeAsync(questionPluginFunctions["Question"], q1);
Console.WriteLine(result);

And the output will look like this:

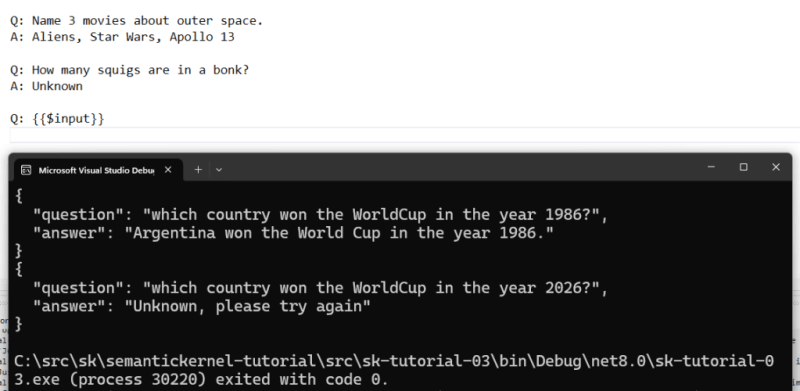

We can change our prompt to return a more structured response, for example in JSON format. This is the new prompt:

I am a highly intelligent question answering bot. If you ask me a question that is rooted in truth, I will give you the answer. If you ask me a question that is nonsense, trickery, or has no clear answer, I will respond with "Unknown, please try again".

Answer the question using JSON format.

example:

{
  "question": "question",
  "answer": "answer"
}

Sample Input

Q: What is human life expectancy in the United States?

A: Human life expectancy in the United States is 78 years.

Q: Who was president of the United States in 1955?

A: Dwight D. Eisenhower was president of the United States in 1955.

Q: Which party did he belong to?

A: He belonged to the Republican Party.

Q: What is the square root of banana?

A: Unknown

Q: How does a telescope work?

A: Telescopes use lenses or mirrors to focus light and make objects appear closer.

Q: Where did the first humans land on the moon in 1969?

A: The first humans landed on the moon on the southwestern edge of the Sea of Tranquility.

Q: Name 3 movies about outer space.

A: Aliens, Star Wars, Apollo 13

Q: How many squigs are in a bonk?

A: Unknown

Q: {{$input}}

And this is the sample output, with the response in JSON format.
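Once the response is JSON, it can be read from code. The sketch below uses `System.Text.Json` and a hard-coded, made-up response string shaped like the example in the prompt; it is not captured model output. `TryReadAnswer` is a hypothetical helper, written defensively because a model is not guaranteed to return valid JSON.

```csharp
using System;
using System.Text.Json;

// Hypothetical helper: returns true only when the text is a JSON object
// with a string "answer" property.
static bool TryReadAnswer(string json, out string answer)
{
    answer = string.Empty;
    try
    {
        using JsonDocument doc = JsonDocument.Parse(json);
        JsonElement root = doc.RootElement;
        if (root.ValueKind == JsonValueKind.Object &&
            root.TryGetProperty("answer", out JsonElement value) &&
            value.ValueKind == JsonValueKind.String)
        {
            answer = value.GetString() ?? string.Empty;
            return true;
        }
        return false;
    }
    catch (JsonException)
    {
        // The text was not valid JSON at all.
        return false;
    }
}

// Made-up sample response, following the shape requested in the prompt.
string response =
    "{ \"question\": \"which country won the WorldCup in the year 1986?\", \"answer\": \"Argentina\" }";

if (TryReadAnswer(response, out string parsed))
{
    Console.WriteLine($"Answer: {parsed}");
}
else
{
    Console.WriteLine("The response was not in the expected JSON format.");
}
```

In the real application, `response` would come from `result.ToString()` after calling `kernel.InvokeAsync`.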

Conclusion

In this post, we learned how to create and use plugins with Semantic Kernel. We created a simple question answering plugin that uses natural language prompts to request AI services. We also learned how to import and invoke the plugin functions using C# code.

In the next post, we will explore how we can do even more with plugins and Semantic Kernel.

You can find the complete source here: https://aka.ms/sktutrepo

Happy coding!

Greetings

El Bruno

More posts on my blog, ElBruno.com.

More info at https://beacons.ai/elbruno
