
Step-by-Step Guide: Write Your First AI Storyteller with Ollama (llama3.2) and Semantic Kernel in C#

Introduction

Imagine a world where stories come alive at your command, where a friendly AI can weave enchanting tales tailored just for you. Welcome to the realm of AI storytelling with Ollama and the Semantic Kernel! In this guide, you'll embark on a journey to create your very own AI storyteller using C#. Whether you're a seasoned developer or a curious beginner, this step-by-step tutorial will empower you to harness the power of large language models and bring your imaginative narratives to life.

Steps to Follow

Step 1: Download Ollama

- Download the Ollama installer from the official Ollama website.

- On Windows, make sure your system runs Windows 10 or later.

Step 2: Install Ollama

Run the OllamaSetup installer.

Once Ollama has installed successfully, a welcome message appears in your terminal.

Step 3: Install Semantic Kernel SDK

Install the Semantic Kernel SDK from NuGet (package name: Microsoft.SemanticKernel).

Step 4: Install OllamaSharp

Next, install the OllamaSharp package, also from NuGet.

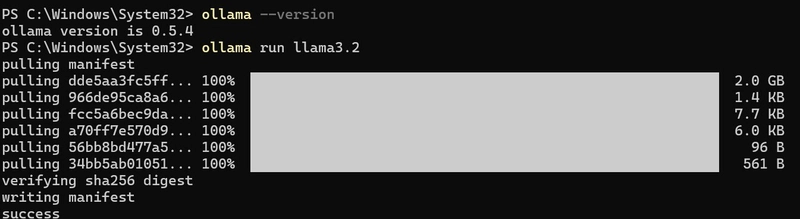

Step 5: Run the LLM

Start the LLM by executing the following command in PowerShell:

ollama run llama3.2

Confirm that Ollama is running by opening http://localhost:11434/ in your browser. The page should show a short status message saying that Ollama is running.
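The same check can also be done from code. The following is a minimal sketch, assuming Ollama listens on its default address http://localhost:11434 and answers the root path with a success status. OllamaProbe and IsRunningAsync are hypothetical names introduced for illustration; they are not part of Ollama or OllamaSharp.

```csharp
using System;
using System.Net.Http;
using System.Threading.Tasks;

// Hypothetical helper (not part of Ollama or OllamaSharp): probes the server root URL.
public static class OllamaProbe
{
    // Returns true when the server answers the given URL with a success status code.
    public static async Task<bool> IsRunningAsync(HttpClient client, string baseUrl)
    {
        try
        {
            using var response = await client.GetAsync(baseUrl);
            return response.IsSuccessStatusCode;
        }
        catch (HttpRequestException)
        {
            // Connection refused or host unreachable: treat as "not running".
            return false;
        }
        catch (TaskCanceledException)
        {
            // The request exceeded the client's timeout.
            return false;
        }
    }
}
```

A call such as `await OllamaProbe.IsRunningAsync(new HttpClient { Timeout = TimeSpan.FromSeconds(5) }, "http://localhost:11434/")` could run at startup so the app can print a friendly hint instead of failing on the first chat request.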

Step 6: Write the ChatbotHelper

    using System.Runtime.CompilerServices;
    using System.Text;
    using Microsoft.SemanticKernel;
    using Microsoft.SemanticKernel.ChatCompletion;
    using OllamaSharp;
    using OllamaSharp.Models.Chat;

    namespace MyPlaygroundApp.Utils
    {
        // Adapts a locally running Ollama model to Semantic Kernel's chat completion interface.
        public class ChatbotHelper : IChatCompletionService
        {
            private const string ModelId = "llama3.2";

            private readonly IOllamaApiClient ollamaApiClient;

            public ChatbotHelper()
            {
                // Address of the local Ollama server started in Step 5.
                this.ollamaApiClient = new OllamaApiClient("http://localhost:11434");
            }

            public IReadOnlyDictionary<string, object?> Attributes => new Dictionary<string, object?>();

            // Sends the whole history and returns the streamed reply as one complete message.
            public async Task<IReadOnlyList<ChatMessageContent>> GetChatMessageContentsAsync(
                ChatHistory chatHistory,
                PromptExecutionSettings? executionSettings = null,
                Kernel? kernel = null,
                CancellationToken cancellationToken = default
            )
            {
                var request = CreateChatRequest(chatHistory);
                var content = new StringBuilder();
                List<ChatResponseStream> innerContent = [];
                AuthorRole? authorRole = null;

                await foreach (var response in ollamaApiClient.ChatAsync(request, cancellationToken))
                {
                    if (response == null || response.Message == null)
                    {
                        continue;
                    }

                    innerContent.Add(response);
                    if (response.Message.Content is not null)
                    {
                        content.Append(response.Message.Content);
                    }
                    authorRole = GetAuthorRole(response.Message.Role);
                }

                return
                [
                    new ChatMessageContent
                    {
                        Role = authorRole ?? AuthorRole.Assistant,
                        Content = content.ToString(),
                        InnerContent = innerContent,
                        ModelId = ModelId
                    }
                ];
            }

            // Yields each chunk of the reply as soon as the model produces it.
            public async IAsyncEnumerable<StreamingChatMessageContent> GetStreamingChatMessageContentsAsync(
                ChatHistory chatHistory,
                PromptExecutionSettings? executionSettings = null,
                Kernel? kernel = null,
                [EnumeratorCancellation] CancellationToken cancellationToken = default
            )
            {
                var request = CreateChatRequest(chatHistory);

                await foreach (var response in ollamaApiClient.ChatAsync(request, cancellationToken))
                {
                    // Skip empty chunks, as the non-streaming method does.
                    if (response?.Message is null)
                    {
                        continue;
                    }

                    yield return new StreamingChatMessageContent(
                        role: GetAuthorRole(response.Message.Role) ?? AuthorRole.Assistant,
                        content: response.Message.Content,
                        innerContent: response,
                        modelId: ModelId
                    );
                }
            }

            // Maps an Ollama chat role to the matching Semantic Kernel role.
            private static AuthorRole? GetAuthorRole(ChatRole? role)
            {
                return role?.ToString().ToUpperInvariant() switch
                {
                    "USER" => AuthorRole.User,
                    "ASSISTANT" => AuthorRole.Assistant,
                    "SYSTEM" => AuthorRole.System,
                    _ => null
                };
            }

            // Converts the Semantic Kernel chat history into an Ollama chat request.
            private static ChatRequest CreateChatRequest(ChatHistory chatHistory)
            {
                var messages = new List<Message>();

                foreach (var message in chatHistory)
                {
                    messages.Add(
                        new Message
                        {
                            // Keep assistant replies as assistant messages; otherwise earlier
                            // answers would be sent back to the model as system prompts.
                            Role = message.Role == AuthorRole.User ? ChatRole.User
                                : message.Role == AuthorRole.Assistant ? ChatRole.Assistant
                                : ChatRole.System,
                            Content = message.Content,
                        }
                    );
                }

                return new ChatRequest
                {
                    Messages = messages,
                    Stream = true,
                    Model = ModelId
                };
            }
        }
    }

ChatbotHelper: This class implements the IChatCompletionService interface and encapsulates the logic for talking to the Ollama API.

OllamaApiClient: The constructor creates this client to communicate with the running LLM at http://localhost:11434.

GetChatMessageContentsAsync: This method builds a request from the chat history, reads the streamed response chunks from the LLM, and combines them into a single message.

CreateChatRequest: This method converts the chat history into a ChatRequest for the llama3.2 model with streaming enabled, which is then sent to the LLM.
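The way GetChatMessageContentsAsync turns a stream of partial responses into one message can be shown in isolation. The following is a minimal sketch that uses only the .NET standard library. StreamingSketch, FakeChunksAsync, and CollectAsync are hypothetical names, and the fake stream merely stands in for the chunks that the Ollama client would deliver.

```csharp
#nullable enable
using System;
using System.Collections.Generic;
using System.Text;
using System.Threading.Tasks;

// Hypothetical stand-in for a streamed chat reply; none of this is an OllamaSharp type.
public static class StreamingSketch
{
    // Simulates a model that emits its answer in small pieces, one of them empty (null).
    public static async IAsyncEnumerable<string?> FakeChunksAsync()
    {
        foreach (var piece in new string?[] { "Once ", "upon ", null, "a time" })
        {
            await Task.Yield(); // pretend each piece arrives asynchronously
            yield return piece;
        }
    }

    // Concatenates every non-null chunk into one reply, skipping the empty ones.
    public static async Task<string> CollectAsync(IAsyncEnumerable<string?> chunks)
    {
        var content = new StringBuilder();
        await foreach (var chunk in chunks)
        {
            if (chunk is null)
            {
                continue;
            }
            content.Append(chunk);
        }
        return content.ToString();
    }
}
```

Calling `await StreamingSketch.CollectAsync(StreamingSketch.FakeChunksAsync())` returns the joined text. A streaming caller would instead print each chunk as it arrives, which is the trade-off between the two interface methods: the complete message is simpler to handle, while streaming shows output sooner.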

Step 7: Create the main entry point of the app

    using Microsoft.SemanticKernel.ChatCompletion;
    using MyPlaygroundApp.Utils;

    namespace MyPlaygroundApp
    {
        public class Program
        {
            public static async Task Main()
            {
                var mySweetStoryteller = new ChatbotHelper();
                var history = new ChatHistory();

                // The system message sets the storyteller persona for the whole conversation.
                history.AddSystemMessage("You are a nice kid storyteller that will tell us a short and sweet bedtime story.");

                // Keep chatting until the user submits an empty line.
                while (true)
                {
                    Console.Write("Kid: ");
                    var userMessage = Console.ReadLine();
                    if (string.IsNullOrWhiteSpace(userMessage))
                    {
                        break;
                    }

                    history.AddUserMessage(userMessage);

                    var response = await mySweetStoryteller.GetChatMessageContentAsync(history);
                    Console.WriteLine($"My Sweet Storyteller: {response.Content}");

                    // Store the reply so the next request carries the full conversation.
                    history.AddMessage(response.Role, response.Content ?? string.Empty);
                }
            }
        }
    }

AddSystemMessage is a method of the ChatHistory class. In the storytelling application it serves the following purpose:

☆ Purpose of AddSystemMessage ☆

- Context Setting: AddSystemMessage adds a message with the system role to the chat history. This message sets the context or persona for the AI and tells it what framework to respond within.

- Guiding Behavior: The system message steers the AI's behavior. In the code above, it instructs the AI to act as a "nice kid storyteller," so the responses are tailored to children.
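The points above can be illustrated with a short sketch, assuming the Microsoft.SemanticKernel NuGet package is referenced. SystemMessageSketch, BuildHistory, and PrintRoles are hypothetical names, and the shortened prompt is for illustration only.

```csharp
using System;
using Microsoft.SemanticKernel.ChatCompletion;

// Hypothetical helper class for illustration; only ChatHistory comes from Semantic Kernel.
public static class SystemMessageSketch
{
    // Builds a history whose first entry is the system message that frames the conversation.
    public static ChatHistory BuildHistory()
    {
        var history = new ChatHistory();
        history.AddSystemMessage("You are a nice kid storyteller.");
        history.AddUserMessage("I want a magic story");
        return history;
    }

    // Prints the role and text of every message, in the order they will be sent.
    public static void PrintRoles(ChatHistory history)
    {
        foreach (var message in history)
        {
            Console.WriteLine($"{message.Role}: {message.Content}");
        }
    }
}
```

Because the system message is stored in the same history as the user messages, it is sent along with every request, so the persona applies to each reply and not only to the first one.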

Result

Kid: I want a magic story

My Sweet Storyteller: Snuggle in tight, because I've got just the thing for you! Once upon a time, in a land far, far away, there was a tiny village nestled between two great mountains. The villagers lived simple lives, tending to their gardens and animals, but they all shared one magical secret: each and every person had a special spark within them.

This spark was known as the "Moonstone," and it glowed with a soft, ethereal light that only appeared when someone was being truly kind, brave, or wise. The villagers believed that when their Moonstones shone brightly, they could perform incredible feats of magic.

One evening, a curious young girl named Luna stumbled upon an ancient book hidden deep within the village library. As she flipped through its yellowed pages, she discovered that her own Moonstone was growing stronger by the day!

Excited to unlock her true potential, Luna decided to embark on a quest to find the legendary "Moonweaver." This mystical being was said to possess the most radiant Moonstone of all, and those who found it would be granted the power to weave magical spells that could heal even the greatest wounds.

With a determined heart and a twinkle in her eye, Luna set off into the night, following a trail of glittering moonstones that led her deeper into the mountains. As she climbed higher, the air grew sweeter with the scent of blooming wildflowers, and Luna's Moonstone began to shine brighter with each step.

At last, she came upon a clearing where the most radiant Moonstone of all was suspended from a celestial branch. Luna reached out a trembling hand, and as her fingers touched the glowing orb, she felt an electric surge course through her veins.

From that moment on, Luna's Moonstone shone like a beacon in the night sky, illuminating a path for those who sought guidance, courage, or healing. And though the villagers whispered among themselves about Luna's newfound power, they knew that only one thing was true: kindness, bravery, and wisdom were always the greatest magic of all.

And so, my dear friend, it's time for you to drift off to dreamland, with your own Moonstone shining brightly within you. May its gentle glow guide you on your own magical journey, and may sweet dreams fill your heart and soul.

Conclusion

This code creates an interactive console application in which a user chats with an AI storyteller. Ollama runs the llama3.2 model locally, Semantic Kernel supplies the chat abstractions, and because the full chat history is sent with every request, the storyteller keeps context across turns.

Reference: https://blog.antosubash.com/posts/ollama-with-semantic-kernel

Love C#!
